Explaining RetinaNet

A paper from one of Facebook's teams came out in the past few months: Focal Loss for Dense Object Detection. The goal of this post is to explain this work as I go through the paper, some of its references, and one particular implementation in Keras.

Object Detection

"Object detection" as it is used here refers to machine learning models that can not just identify a single object in an image, but can identify and localize multiple objects, like in the photo below, taken from Supercharge your Computer Vision models with the TensorFlow Object Detection API:

At the time of writing, the most accurate object-detection methods were based around R-CNN and its variants, and all used two-stage approaches:

- One model proposes a sparse set of locations in the image that probably contain something. Ideally, this set contains all objects in the image while filtering out the majority of negative locations (i.e. only background, not foreground).

- Another model, typically a CNN (convolutional neural network), classifies each location in that sparse set as either being foreground and some specific object class (like "kite" or "person" above), or as being background.

Single-stage approaches were also developed, like YOLO, SSD, and OverFeat. These simplified (or approximated) the two-stage approach by replacing the first step with brute force. That is, instead of generating a sparse set of locations that probably have something of interest, they handle all locations, whether or not they are likely to contain something, by blanketing the entire image in a dense sampling of many locations, many sizes, and many aspect ratios.

This is simpler and faster - but not as accurate as the two-stage approaches.
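To make "blanketing the entire image" concrete, here is a minimal Python sketch that enumerates candidate boxes over a regular grid at several sizes and aspect ratios. The image size, stride, box sizes, and ratios are hypothetical values chosen for illustration, not the actual settings of YOLO, SSD, or OverFeat, and real detectors usually repeat this over several feature maps.

```python
from itertools import product

def dense_boxes(image_w, image_h, stride, sizes, aspect_ratios):
    """Blanket an image with candidate boxes given as (cx, cy, w, h).

    One box per (grid location, size, aspect ratio) combination. The aspect
    ratio is taken as height / width, and every box has area size**2.
    """
    boxes = []
    for y in range(stride // 2, image_h, stride):
        for x in range(stride // 2, image_w, stride):
            for size, ratio in product(sizes, aspect_ratios):
                w = size / ratio ** 0.5
                h = size * ratio ** 0.5
                boxes.append((x, y, w, h))
    return boxes

# Hypothetical settings: a 640x480 image, a box center every 16 pixels,
# 3 sizes x 3 aspect ratios at each center.
boxes = dense_boxes(640, 480, stride=16, sizes=[32, 64, 128], aspect_ratios=[0.5, 1.0, 2.0])
print(len(boxes))
```

Even this single grid yields 10,800 candidate boxes (40 × 30 centers × 9 shapes), and for a typical photo nearly all of them cover only background.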

Training & Class Imbalance

Briefly, the process of training these models requires minimizing some kind of loss function that is based on what the model misclassifies when it is run on some training data. It's preferable to be able to compute some loss over each individual instance, and add all of these losses up to produce an overall loss. (Yes, far more can be said on this, but the details aren't really important here.)

This leads to a problem in one-stage detectors: the dense set of locations being classified usually contains a small number of locations that actually have objects (positives), and a much larger number of locations that are just background and can be very easily classified as such (easy negatives). However, the loss function still adds all of them up, and even if the loss for each easy negative is relatively low, their cumulative loss can drown out the loss from objects that are being misclassified.

That is: A large number of tiny, irrelevant losses overwhelm a smaller number of larger, relevant losses. The paper was a bit terse on this; it took a few re-reads to understand why "easy negatives" were an issue, so hopefully I have this right.

The training process is trying to minimize this loss, and so it is mostly nudging the model to improve where it least needs it (its ability to classify background areas that it already classifies well) and neglecting where it most needs it (its ability to classify the "difficult" objects that it is misclassifying).
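A quick back-of-the-envelope calculation shows how the sum can be dominated by easy negatives. The counts and probabilities below are hypothetical, picked only to illustrate the effect; `p_t` here means the probability the model assigns to the correct class, and the per-example loss is ordinary cross-entropy.

```python
import math

def cross_entropy(p_t):
    """Cross-entropy for one example, given p_t, the probability assigned to the true class."""
    return -math.log(p_t)

# Hypothetical counts and confidences, chosen only to illustrate the imbalance.
num_easy_negatives, p_easy = 100_000, 0.99  # background the model already gets right
num_hard_examples, p_hard = 10, 0.1         # objects the model is getting badly wrong

easy_total = num_easy_negatives * cross_entropy(p_easy)
hard_total = num_hard_examples * cross_entropy(p_hard)

print(f"loss per easy negative: {cross_entropy(p_easy):.4f}")
print(f"loss per hard example:  {cross_entropy(p_hard):.4f}")
print(f"summed easy: {easy_total:.0f}, summed hard: {hard_total:.0f}")
```

Each easy negative contributes only about 0.01, but 100,000 of them add up to roughly 1005, more than 40 times the roughly 23 contributed by the ten badly misclassified examples.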

This is class imbalance in a nutshell, which the paper identifies as the limiting factor for the accuracy of one-stage detectors. Existing approaches try to tackle it with methods like bootstrapping or hard example mining, but their accuracy is still lower.

Focal Loss

So, the point of all this is: A tweak to the loss function can fix this issue, and retain the speed and simplicity of one-stage approaches while surpassing the accuracy of existing two-stage ones.

At least, this is what the paper claims. Their novel loss function is called Focal Loss (as the title references), and it multiplies the normal cross-entropy by a factor, $(1-p_t)^\gamma$. Here $p_t$ is the probability the model assigns to the correct class: it approaches 1 as the model grows more confident in the correct classification, and approaches 0 as it grows confident in an incorrect one. $\gamma$ is a "focusing" hyperparameter (they used $\gamma=2$). Writing cross-entropy as $\mathrm{CE}(p_t) = -\log(p_t)$, the focal loss is $\mathrm{FL}(p_t) = -(1-p_t)^\gamma \log(p_t)$. Intuitively, this scaling makes sense: if a classification is already correct and confident (as with the "easy negatives"), $(1-p_t)^\gamma$ tends toward 0, and so the portion of the loss multiplied by it likewise tends toward 0.
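The effect of the $(1-p_t)^\gamma$ factor can be checked numerically. This minimal Python sketch implements focal loss as $-(1-p_t)^\gamma \log(p_t)$ with $\gamma=2$; it omits the α-weighting the paper also applies in practice, and the example probabilities and the 100,000-versus-10 split are hypothetical.

```python
import math

def cross_entropy(p_t):
    """Cross-entropy for one example; p_t is the probability assigned to the true class."""
    return -math.log(p_t)

def focal_loss(p_t, gamma=2.0):
    """Focal loss -(1 - p_t)**gamma * log(p_t), without the paper's alpha weighting."""
    return (1.0 - p_t) ** gamma * cross_entropy(p_t)

# How much smaller is focal loss than cross-entropy as the model grows more confident?
for p_t in (0.1, 0.5, 0.9, 0.99):
    ratio = cross_entropy(p_t) / focal_loss(p_t)
    print(f"p_t={p_t:<4}  CE={cross_entropy(p_t):.4f}  FL={focal_loss(p_t):.6f}  CE/FL={ratio:.0f}")

# Hypothetical split: 100,000 easy negatives (p_t = 0.99) vs 10 hard examples (p_t = 0.1).
easy_total = 100_000 * focal_loss(0.99)
hard_total = 10 * focal_loss(0.1)
print(f"summed easy: {easy_total:.2f}, summed hard: {hard_total:.2f}")
```

At $p_t = 0.9$ the focal loss is 100 times smaller than cross-entropy (a figure the paper also gives), while a badly misclassified example at $p_t = 0.1$ keeps about 81% of its loss. Summed over the hypothetical split, the 100,000 easy negatives now contribute only about 0.1, against about 18.7 from the ten hard examples.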

RetinaNet architecture

The paper gives the name RetinaNet to the network they created that incorporates this focal loss in its training. While it says, "We emphasize that our simple detector achieves top results not based on innovations in network design but due to our novel loss," it is important not to misread this: they are saying that they didn't need to invent a new network design, not that the network design doesn't matter. Later in the paper, they say that it is in fact crucial that RetinaNet's architecture relies on FPN (Feature Pyramid Network) as its backbone.

Feature Pyramid Network

Another recent paper, Feature Pyramid Networks for Object Detection, describes the basis of this FPN in detail (and, non-coincidentally I'm sure, it shares 4 co-authors with the paper this post explores). The paper is fairly concise in describing FPNs; it takes only around 3 pages to explain their purpose, related work, and their entire design. The remainder shows experimental results and specific applications of FPNs. While it shows FPNs implemented on a particular underlying network (ResNet), they were purposely designed to be simple and adaptable to nearly any kind of CNN.

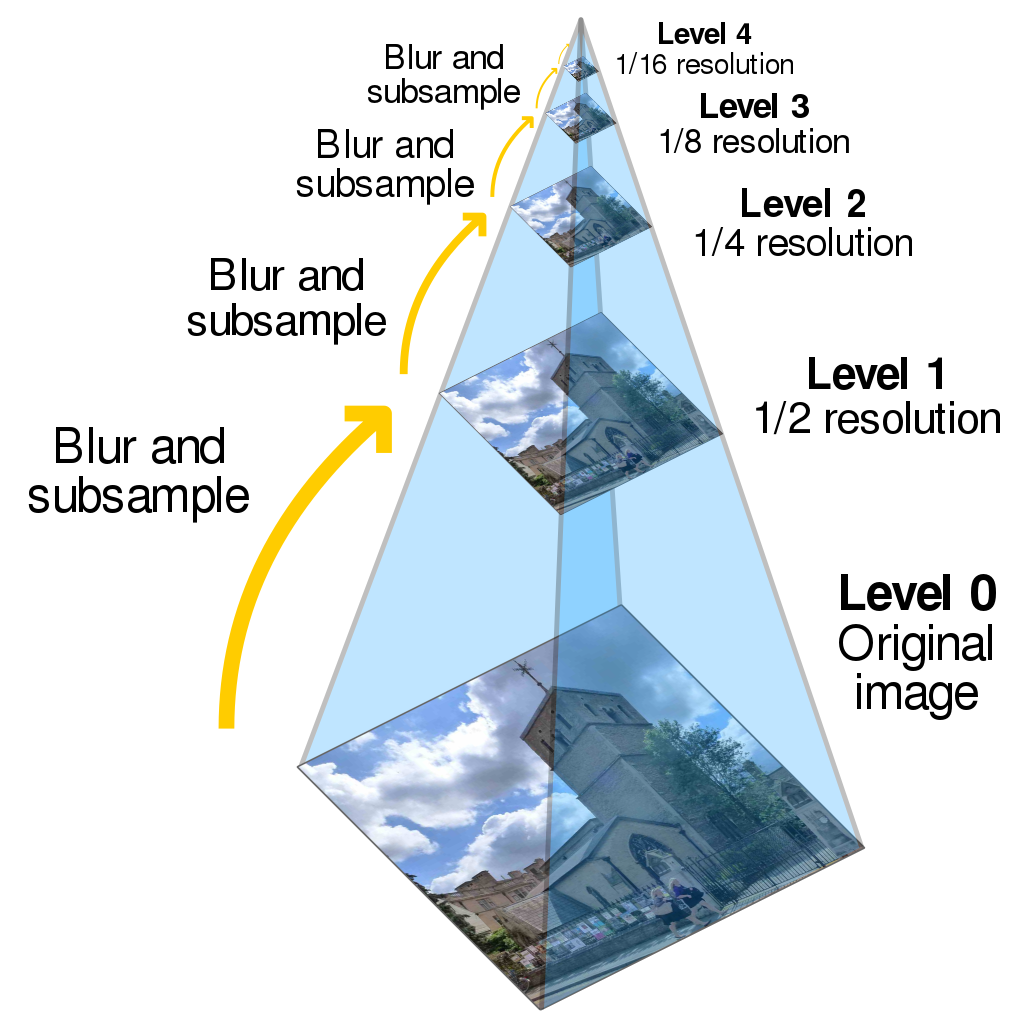

To begin understanding this, start with image pyramids. The below diagram illustrates an image pyramid:

Image pyramids have many uses, but the paper focuses on their use in taking something that works only at a certain scale of image (for instance, an image classification model that only identifies objects that are around 50 pixels across) and adapting it to handle different scales by applying it at every level of the image pyramid. If the model has a little flexibility, some level of the image pyramid is bound to have scaled the object to a size the model can match.
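Here is a minimal NumPy sketch of that idea: build an image pyramid by repeated 2×2 averaging, then check at which level a synthetic object reaches the one scale an imaginary model can handle. The 50-pixel model scale, the ±25% tolerance, and the square "object" are all assumptions for illustration, and measuring the object's width stands in for actually running a model.

```python
import numpy as np

def downsample_2x(image):
    """Halve height and width by averaging each 2x2 block (an odd last row/column is dropped)."""
    h, w = image.shape[0] // 2 * 2, image.shape[1] // 2 * 2
    blocks = image[:h, :w].reshape(h // 2, 2, w // 2, 2)
    return blocks.mean(axis=(1, 3))

def image_pyramid(image, min_size=16):
    """Return [full-size image, 1/2 scale, 1/4 scale, ...], stopping before sides drop below min_size."""
    levels = [image]
    while min(levels[-1].shape) // 2 >= min_size:
        levels.append(downsample_2x(levels[-1]))
    return levels

# Synthetic 512x512 grayscale image containing one 200-pixel-wide square "object".
image = np.zeros((512, 512))
image[100:300, 150:350] = 1.0

MODEL_SCALE = 50   # hypothetical: the model only recognizes objects ~50 px across
TOLERANCE = 0.25   # hypothetical: it tolerates +/-25% size variation

matches = []
for level, img in enumerate(image_pyramid(image)):
    width = int((img.max(axis=0) > 0.5).sum())  # object's width in pixels at this level
    if abs(width - MODEL_SCALE) <= MODEL_SCALE * TOLERANCE:
        matches.append(level)
    print(f"level {level}: shape {img.shape}, object width {width}")

print("model can match the object at levels:", matches)
```

Only level 2 matches: after two halvings, the 200-pixel square is about 49 pixels wide, so the fixed-scale model would find it there and nowhere else.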

Typically, though, detection or classification isn't done directly on an image, but rather, the image is converted to some more useful feature space. However, these feature spaces likewise tend to be useful only at a specific scale. This is the rationale behind "featurized image pyramids", or feature pyramids built upon image pyramids, created by converting each level of an image pyramid to that feature space.
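A featurized image pyramid simply runs the same feature extractor on every level of the image pyramid. In this minimal sketch the extractor is gradient magnitude (a crude edge detector), a hypothetical stand-in for the much richer CNN features discussed here, and the pyramid uses plain 2× subsampling without smoothing to keep it short.

```python
import numpy as np

def edge_features(image):
    """Stand-in feature extractor: per-pixel gradient magnitude, which responds to edges."""
    gy, gx = np.gradient(image)  # derivatives along rows (y) and columns (x)
    return np.hypot(gx, gy)

def featurized_pyramid(image, num_levels=4):
    """Build an image pyramid by 2x subsampling, then featurize every level independently."""
    levels = [image]
    for _ in range(num_levels - 1):
        levels.append(levels[-1][::2, ::2])
    return [edge_features(level) for level in levels]

# Synthetic image: a bright square on a dark background.
image = np.zeros((256, 256))
image[64:192, 64:192] = 1.0

features = featurized_pyramid(image)
print([f.shape for f in features])
```

The structure is the point here: the extractor runs once per level, so if it were a deep CNN, its full cost would be paid again at every scale.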

The problem with featurized image pyramids, the paper says, is that if you try to use them in CNNs, they drastically slow everything down, and use so much memory as to make normal training impossible.

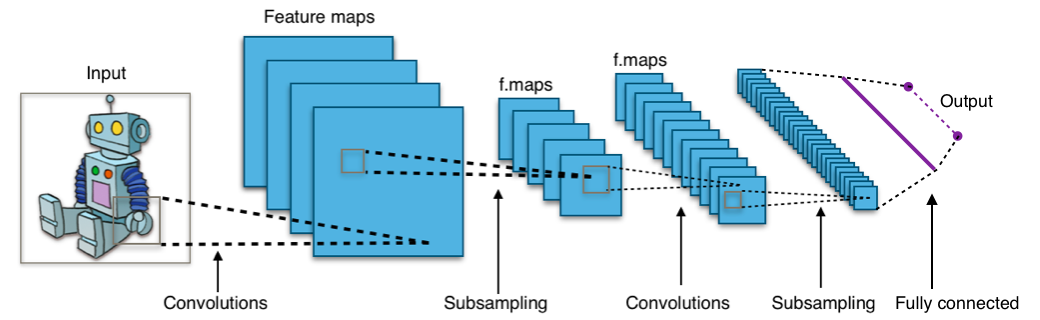

However, take a look below at this generic diagram of a generic deep CNN:

You may notice that this network has a structure that bears some resemblance to an image pyramid. This is because deep CNNs are already computing a sort of pyramid in their convolutional and subsampling stages. In a nutshell, deep CNNs used in image classification push an image through a cascade of feature detectors, and each successive stage contains a feature map that is built out of features in the prior stage - thus producing a feature hierarchy which already is something like a pyramid and contains multiple different scales.
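The pyramid-like shape of a deep CNN's feature hierarchy is easy to see by tracking feature-map sizes. This sketch uses the output strides (4, 8, 16, 32) and channel counts of ResNet-50's last four stages, which the FPN paper labels C2 through C5. It assumes an input whose sides are divisible by 32, so the exact rounding behavior of padded convolutions does not come into play.

```python
# Output stride (relative to the input) and channel count of each ResNet-50 stage's
# final feature map; the FPN paper calls these outputs C2..C5.
RESNET50_STAGES = {
    "C2": (4, 256),
    "C3": (8, 512),
    "C4": (16, 1024),
    "C5": (32, 2048),
}

def feature_hierarchy(height, width, stages=RESNET50_STAGES):
    """Return (height, width, channels) of each stage's feature map for a given input size."""
    if height % 32 or width % 32:
        raise ValueError("this sketch assumes input sides divisible by 32")
    return {name: (height // stride, width // stride, channels)
            for name, (stride, channels) in stages.items()}

for name, shape in feature_hierarchy(224, 224).items():
    print(name, shape)
```

For a 224×224 input this gives 56×56, 28×28, 14×14, and 7×7 maps: each stage halves the spatial resolution, like a pyramid level, while the channel count (and the complexity of what each channel represents) grows.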

When you move through levels of a featurized image pyramid, only scale should change. When you move through levels of the feature hierarchy described here, scale changes, but so does the meaning of the features. This is the semantic gap the paper refers to. The meaning changes because each stage builds up more complex features by combining simpler features from the previous stage. The first stage, for instance, commonly handles pixel-level features like points, lines, or edges at a particular orientation. In the final stage, presumably, the model has learned features complex enough that things like "kite" and "person" can be identified.

The goal of FPN was to find a way to exploit this feature hierarchy, which is already being computed, and to produce something with similar power to a featurized image pyramid but without too high a cost in speed, memory, or complexity.